Introduction

SaaS RS is a code generator for gRPC backends written in the Rust programming language. It transforms your Git repository into a highly opinionated, time-saving Managed Rust Workspace.

It coordinates changes to gRPC Protobuf files and their corresponding Rust code, and generates stubs where business logic or tests should be inserted.

SaaS RS is primarily used via its CLI, which draws inspiration from the Ruby on Rails, Ember.js, and Claude Code CLIs. It applies the MVVM design pattern to gRPC, with Protobuf messages serving as view models, and includes an advanced cloud-native storage layer for your domain objects, object storage, and session storage, with support for a wide range of attached storage providers.

sequenceDiagram

actor CLI

rect rgb(240, 240, 240)

CLI ->>+ api.saas-rs.com: $ saas-rs generate resource invoice --service user --version 1

api.saas-rs.com -->>- CLI: (workspace updated with new model protos, storage bucket, and Rust code)

end

Quick Start

Installation

Once you've installed Rust, install the CLI with:

$ cargo install saas-rs-cli

Login

To begin using the CLI, you first need to log in to the SaaS RS API service running at https://api.saas-rs.com.

The login command is used like this:

$ saas-rs login

Logged in. Greetings David Rauschenbach!

If you prefer a specific browser, you can log in like this:

$ saas-rs login --browser chrome

After a successful login, a 24-hour session token is cached in a config file and used to authorize subsequent CLI operations. The config file is located at:

- ~/.saas-rs/config (Unix)

- %APPDATA%\saas-rs\config (Windows)
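The platform-specific lookup above can be sketched as follows. This is a hypothetical helper, not code from the CLI: it assumes `~` means the `HOME` environment variable on Unix, and it takes the environment lookup as a closure so the logic can be exercised without touching the real environment.

```rust
use std::path::PathBuf;

/// Hypothetical helper: resolves the CLI config file location described above.
/// `get_env` is injected so the logic can be tested without the real environment.
fn config_path(is_windows: bool, get_env: impl Fn(&str) -> Option<String>) -> Option<PathBuf> {
    if is_windows {
        // %APPDATA%\saas-rs\config
        get_env("APPDATA").map(|dir| PathBuf::from(dir).join("saas-rs").join("config"))
    } else {
        // ~/.saas-rs/config, assuming `~` expands to $HOME
        get_env("HOME").map(|dir| PathBuf::from(dir).join(".saas-rs").join("config"))
    }
}
```

In a real program you would call it as `config_path(cfg!(windows), |key| std::env::var(key).ok())`.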

Initialize your Git Repo

A SaaS RS Managed Rust Workspace must first be initialized with the init command. It establishes the requisite workspace layout and defines your brand, which is used to namespace your gRPC protocols.

Use the init command to initialize your empty Git repo:

$ saas-rs init --brand acme

The following Rust workspace is laid out for you:

crates/

├── common/

├── config_store/

├── object_store/

└── session_store/

.rustfmt.toml

.saas-rs.toml

CHANGELOG.md

Cargo.lock

Cargo.toml

Makefile

README.md

After you run the init command, the CLI reports that your Git workspace is now dirty, and that this is a good time to run make and then commit the generated changes before modifying anything.

$ saas-rs init --brand acme

Response received

Patch applied to local workspace

Workspace is dirty; now would be a good time to run `make` and then commit

$ make

cargo fmt --all

cargo build

Updating crates.io index

Locking 301 packages to latest compatible versions

Adding matchit v0.8.4 (available: v0.8.6)

Adding prost v0.13.5 (available: v0.14.1)

Compiling common v0.1.0 (/Users/davidr/workspaces/foo/crates/common)

Compiling config-store v0.1.0 (/Users/davidr/workspaces/foo/crates/config_store)

Compiling object-store v0.1.0 (/Users/davidr/workspaces/foo/crates/object_store)

Compiling session-store v0.1.0 (/Users/davidr/workspaces/foo/crates/session_store)

Finished `dev` profile [unoptimized + debuginfo] target(s) in 2.73s

$ git add -A

$ git commit -m "saas-rs init --brand acme"

Enable MongoDB Support

Your SaaS RS Managed Rust Workspace supports three stores, each backed by a pluggable storage adapter:

- the ConfigStore is your primary store that holds Account records and all your other business domain managed objects

- the ObjectStore can store files and large blobs, if you need that

- the SessionStore holds temporary things like login sessions or shopping carts, and frequently accessed things like API keys

To configure your workspace to support MongoDB, perform:

$ saas-rs enable storage-provider MongoDB

You will notice the following files have been changed in your workspace:

crates/

├── config_store/

│ ├── src/

│ │ ├── factory.rs

├── object_store/

│ ├── src/

│ │ ├── factory.rs

Cargo.toml

MongoDB support includes ConfigStore and ObjectStore adapters, which can be configured with environment variables like this:

CONFIG_STORE_URL=mongodb://myhost:27017/saas-rs-prod

OBJECT_STORE_URL=mongodb://myhost:27017/saas-rs-objects-prod
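The generated factory functions choose a storage adapter by matching on the URL's scheme (the MongoDB and Redis factory.rs excerpts later in this guide show the real match arms). The std-only sketch below mimics that dispatch. The `Adapter` enum and `select_adapter` function are hypothetical, the schemes listed are only the ones that appear in this guide, and unlike the real factories it splits on `://` rather than parsing a full URL.

```rust
/// Hypothetical stand-in for the adapters a factory might construct.
#[derive(Debug, PartialEq)]
enum Adapter {
    Memory,
    MongoDb,
    Redis,
}

/// Picks an adapter from a store URL such as CONFIG_STORE_URL,
/// mirroring the `match url.scheme()` pattern used by the generated factories.
fn select_adapter(url: &str) -> Result<Adapter, String> {
    // Everything before "://" is treated as the scheme.
    let (scheme, _rest) = url
        .split_once("://")
        .ok_or_else(|| format!("not a URL: {url}"))?;
    match scheme {
        "memory" => Ok(Adapter::Memory),
        "mongodb" | "mongodb+srv" => Ok(Adapter::MongoDb),
        "redis" | "redis-cluster" | "redis-sentinel" | "redis+unix" => Ok(Adapter::Redis),
        other => Err(format!("unsupported scheme: {other}")),
    }
}
```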

Perform make and then stage and commit the generated files before you make any further changes:

$ make

cargo fmt --all

cargo build

Finished `dev` profile [unoptimized + debuginfo] target(s) in 2.73s

$ git add -A

$ git commit -m "saas-rs enable storage-provider MongoDB"

Command Line Interface

The SaaS RS CLI is the primary way to use the SaaS RS API at https://api.saas-rs.com.

The following sections describe the usage of its various commands:

- init — Initialize a Git repository with a new managed Rust workspace

- enable — Perform code changes to enable certain feature flags

- generate — Run one of the code generators for models, resources, controllers, etc

The init command

A SaaS RS Managed Rust Workspace must first be initialized with the init command. It establishes the requisite workspace layout and also defines your brand, which is used to namespace your gRPC protocols.

The init command is used like this:

$ saas-rs init --brand acme

The following Rust workspace is laid out for you:

proto/

crates/

├── common/

├── config_store/

├── object_store/

└── session_store/

.rustfmt.toml

.saas-rs.toml

CHANGELOG.md

Cargo.lock

Cargo.toml

Makefile

README.md

- The common crate is where you can place shared code. This includes your brand, which can be found in common/src/consts/mod.rs as: pub const BRAND: &str = "acme";

- The config_store crate defines metadata and factory functions for your primary storage. The actual storage adapters are defined in the open source saas-rs-sdk crate.

- The object_store crate defines metadata and factory functions for your object storage. This comes in handy if your SaaS needs to manage file uploads or other large objects on behalf of your users.

- The session_store crate defines metadata and factory functions for your high-performance session storage. The session store is a good place to keep short-lived session tokens, long-lived API keys, or transient Stripe Checkout records.
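Because the brand namespaces your gRPC protocols, the same `acme` value reappears in the proto package name, the proto directory, and the generated Rust module file shown later in this guide (`package acme.user.v1;`, `proto/acme/user/v1/`, `acme_user_v1.rs`). The helper functions below are hypothetical and only illustrate that naming relationship; the CLI's own logic may differ.

```rust
/// Mirrors the constant generated in common/src/consts/mod.rs.
pub const BRAND: &str = "acme";

/// Hypothetical: the Protobuf package for a service version, e.g. "acme.user.v1".
fn proto_package(brand: &str, service: &str, version: u32) -> String {
    format!("{brand}.{service}.v{version}")
}

/// Hypothetical: the directory holding that package's .proto files.
fn proto_dir(brand: &str, service: &str, version: u32) -> String {
    format!("proto/{brand}/{service}/v{version}")
}

/// Hypothetical: the generated Rust module file, e.g. "acme_user_v1.rs".
fn generated_module_file(brand: &str, service: &str, version: u32) -> String {
    // Package dots become underscores in the generated file name.
    format!("{}.rs", proto_package(brand, service, version).replace('.', "_"))
}
```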

After you run the init command, the CLI reports that your Git workspace is now dirty, and that this is a good time to run make and then commit the generated changes before modifying anything.

$ saas-rs init --brand acme

Response received

Patch applied to local workspace

Workspace is dirty; now would be a good time to run `make` and then commit

$ make

cargo fmt --all

cargo build

Updating crates.io index

Locking 301 packages to latest compatible versions

Adding matchit v0.8.4 (available: v0.8.6)

Adding prost v0.13.5 (available: v0.14.1)

Compiling common v0.1.0 (/Users/davidr/workspaces/foo/crates/common)

Compiling config-store v0.1.0 (/Users/davidr/workspaces/foo/crates/config_store)

Compiling object-store v0.1.0 (/Users/davidr/workspaces/foo/crates/object_store)

Compiling session-store v0.1.0 (/Users/davidr/workspaces/foo/crates/session_store)

Finished `dev` profile [unoptimized + debuginfo] target(s) in 2.73s

$ git add -A

$ git commit -m "saas-rs init --brand acme"

Multi-Language Monorepos

If your git repository will need to hold code in other languages, the Rust workspace can be initialized as a subdirectory. In this hypothetical example, a Rust workspace and its Python language binding co-exist within the same git repo.

proto/

python/

rust/

└── crates/

├── common/

├── config_store/

├── object_store/

└── session_store/

.rustfmt.toml

CHANGELOG.md

Cargo.lock

Cargo.toml

Makefile

README.md

.saas-rs.toml

In such a situation, the init command is used like this:

$ saas-rs init --brand acme --path rust

Enable a feature

The enable command makes the code changes needed to turn on optional feature flags:

- identity-provider — enables support for one or more of the available IdP adapters

- payment-provider — enables support for one or more of the available payment provider adapters

- storage-provider — enables support for one or more of the available storage providers

Enabling an Identity Provider

The enable identity-provider command configures your workspace to use one of the pre-built open source identity provider adapters in the saas-rs-sdk crate (see the src/authentication/handlers folder).

Run this command multiple times to enable all the IdPs you intend to support.

Add Google IdP support

To configure your workspace to support Google logins, perform:

$ saas-rs enable identity-provider Google

You will notice the following files have been changed in your workspace:

Cargo.toml

A closer examination of Cargo.toml shows that an authentication-google feature flag has been added to the saas-rs-sdk dependency:

[workspace.dependencies]

saas-rs-sdk = { version = "...", features = ["...", "authentication-google"] }
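Adding a name to the features list is ordinary Cargo feature selection: code in the dependency that is gated on that feature only compiles when the feature is enabled. The sketch below shows the general mechanism; the function and module are hypothetical and are not the saas-rs-sdk's actual code. Compiled on its own, without any Cargo features, it reports that no identity providers are enabled.

```rust
/// Hypothetical: reports which identity providers this build includes.
fn enabled_identity_providers() -> Vec<&'static str> {
    let mut providers = Vec::new();
    // cfg! expands to `true` only when Cargo enables the named feature for this crate.
    if cfg!(feature = "authentication-google") {
        providers.push("Google");
    }
    providers
}

// Whole items can be gated too; this module does not exist unless the feature is on.
#[cfg(feature = "authentication-google")]
mod google {
    pub const NAME: &str = "Google";
}
```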

Perform make and then stage and commit the generated files before you make any further changes:

$ make

cargo fmt --all

cargo build

Finished `dev` profile [unoptimized + debuginfo] target(s) in 2.73s

$ git add -A

$ git commit -m "saas-rs enable identity-provider Google"

Show all IdPs

To see a list of all the currently supported identity providers, run the command with a help argument like this:

$ saas-rs enable identity-provider --help

Usage: saas-rs enable identity-provider <provider>

Arguments:

<provider> The identity provider [possible values: Amazon, Apple, DigitalOcean, Facebook, GitHub, GitLab, Google,

Instagram, Linode, Microsoft, Okta, Twitter]

Options:

-h, --help Print help

Enabling a Payment Provider

The enable payment-provider command configures your workspace to use one of the pre-built open source payment provider adapters in the saas-rs-sdk crate (see the src/payments/adapters folder).

Enable a payment provider after generating the service-broker feature. Doing so lets you synchronize your Service Plan records and their pricing with the payment provider.

Add Stripe support

To configure your workspace to support Stripe:

$ saas-rs enable payment-provider Stripe

You will notice the following files have been changed in your workspace:

Cargo.toml

A closer examination of Cargo.toml shows that a payments-stripe feature flag has been added to the saas-rs-sdk dependency:

[workspace.dependencies]

saas-rs-sdk = { version = "...", features = ["...", "payments-stripe"] }

Perform make and then stage and commit the generated files before you make any further changes:

$ make

cargo fmt --all

cargo build

Finished `dev` profile [unoptimized + debuginfo] target(s) in 2.73s

$ git add -A

$ git commit -m "saas-rs enable payment-provider Stripe"

Show all Payment Providers

To see a list of all the currently supported payment providers, run the command with a help argument like this:

$ saas-rs enable payment-provider --help

Usage: saas-rs enable payment-provider <provider>

Arguments:

<provider> The payment provider [possible values: Stripe]

Options:

-h, --help Print help

Enabling a Storage Provider

The enable storage-provider command configures your workspace to use one of the pre-built open source storage adapters in the saas-rs-sdk crate (see the src/storage folder).

Run this command multiple times to enable all the storage providers you intend to support.

Enabling MongoDB Support

To configure your workspace to support MongoDB, perform:

$ saas-rs enable storage-provider MongoDB

You will notice the following files have been changed in your workspace:

crates/

├── config_store/

│ ├── src/

│ │ ├── factory.rs

├── object_store/

│ ├── src/

│ │ ├── factory.rs

Cargo.toml

A closer examination of Cargo.toml shows that a storage-mongodb feature flag has been added to the saas-rs-sdk dependency, giving your workspace access to all MongoDB-related adapters and support libraries.

[workspace.dependencies]

saas-rs-sdk = { version = "...", features = ["...", "storage-mongodb"] }

And a closer examination of config_store/src/factory.rs will show that support has been added for MongoDB URLs:

Ok(match url.scheme() {
    ...
    "mongodb" | "mongodb+srv" => {
        let index_models_by_bucket = std::collections::HashMap::new(); // TODO
        Arc::new(
            saas_rs_sdk::storage::config_store::adapters::mongodb::MongodbConfigStore::new(
                url,
                app_name,
                belongs_tos_by_bucket,
                has_manys_by_bucket,
                index_models_by_bucket,
            )
            .await
            .map_err(|e| Status::internal(e.to_string()))?,
        )
    }
    ...
})

Perform make and then stage and commit the generated files before you make any further changes:

$ make

cargo fmt --all

cargo build

Finished `dev` profile [unoptimized + debuginfo] target(s) in 2.73s

$ git add -A

$ git commit -m "saas-rs enable storage-provider MongoDB"

Enabling Redis Support

To configure your workspace to support Redis, perform:

$ saas-rs enable storage-provider Redis

You will notice the following files have been changed in your workspace:

crates/

├── session_store/

│ ├── src/

│ │ ├── factory.rs

Cargo.toml

A closer examination of Cargo.toml shows that a storage-redis feature flag has been added to the saas-rs-sdk dependency, giving your workspace access to all Redis-related adapters and support libraries.

[workspace.dependencies]

saas-rs-sdk = { version = "0.2.7", features = ["...", "storage-redis"] }

And a closer examination of session_store/src/factory.rs will show that support has been added for Redis URLs:

Ok(match url.scheme() {
    ...
    "redis" | "redis-cluster" | "redis-sentinel" | "redis+unix" => Arc::new(
        saas_rs_sdk::storage::session_store::adapters::redis::RedisSessionStore::new(
            url,
            app_name,
            belongs_tos_by_bucket,
            has_manys_by_bucket,
        )
        .await?,
    ),
    ...
})

Perform make and then stage and commit the generated files before you make any further changes:

$ make

cargo fmt --all

cargo build

Finished `dev` profile [unoptimized + debuginfo] target(s) in 2.73s

$ git add -A

$ git commit -m "saas-rs enable storage-provider Redis"

Show all Storage Providers

To see a list of all the currently supported storage providers, run the command with a help argument like this:

$ saas-rs enable storage-provider --help

Usage: saas-rs enable storage-provider <provider>

Arguments:

<provider> The storage provider [possible values: LocalFileSystem, Memory, MongoDB, Postgres, Redis, S3]

Options:

-h, --help Print help

generate

Much like the generators in Rails and the Ember CLI, the generate command is the primary way to create new code.

sequenceDiagram

actor CLI

CLI ->>+ api.saas-rs.com: Generator Request + git archive.zip

api.saas-rs.com -->>- CLI: Generator Response + git patch

- service — generates a new gRPC service

- model — generates a new view model

- resource — generates a new resource, which is a model plus its operations

- controller — generates a new controller, which implements the resource functions

- feature — generates a pre-built feature into the existing codebase

The generate service command

The generate service command generates code that:

- defines a new network service

- implements the server-side handling of the network protocol

- builds the binary

- demonstrates some minimal integration testing and acceptance testing for the new service

The generate service command is used like this:

$ saas-rs generate service user

The following content is added to the Rust workspace for you:

crates/

├── protocol/

│ ├── src/

│ │ ├── lib.rs

│ │ └── generated/

│ │ ├── acme_user_v1.rs

│ │ └── acme_user_v1_serde.rs

│ ├── Cargo.toml

│ └── build.rs

├── user_server/

│ ├── debian/

│ │ └── service

│ ├── src/

│ │ ├── consts/

│ │ │ ├── env_vars.rs

│ │ │ └── mod.rs

│ │ ├── v1/

│ │ │ ├── controllers/

│ │ │ │ ├── account.rs

│ │ │ │ ├── authentication.rs

│ │ │ │ ├── linked_account.rs

│ │ │ │ └── mod.rs

│ │ │ └── middleware/

│ │ │ ├── authorization.rs

│ │ │ └── mod.rs

│ │ ├── lib.rs

│ │ └── main.rs

│ ├── tests/

│ │ ├── integration_config_store_accounts.rs

│ │ ├── integration_config_store_linked_accounts.rs

│ │ └── testsupport.rs

│ └── Cargo.toml

proto/

└── acme/

└── user/

└── v1/

├── access_token.proto

├── access_token_resource.proto

├── account.proto

├── account_resource.proto

├── error.proto

├── linked_account.proto

├── linked_account_resource.proto

└── user_service.proto

These folders serve the following purposes:

- proto - This folder defines your network protocols, including user-facing, admin-facing, and machine-facing services. It contains Protobuf files holding both view models (over-the-wire models) and gRPC service definitions. The main gRPC service definition file is user_service.proto.

- crates/protocol - This folder contains your network protocols in Rust form. These Rust files are generated by Tonic and Prost from the proto files. In the generated/ folder, the acme_user_v1.rs module holds the generated structs for your view models and their resource actions, while the acme_user_v1_serde.rs module holds pbjson support so that every Protobuf message struct can be serialized to JSON and BSON using camelCased keys.

- crates/user_server - This folder contains your new gRPC service endpoint. It compiles into a stand-alone binary that runs with cargo run --bin acme-user-server, and is also available in library form via the lib.rs module. The library form lets you embed the service elsewhere, for example to combine user-facing, admin-facing, and machine-to-machine gRPC services in a single all-inclusive binary such as acme-server.
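The camelCased keys follow the standard proto3 JSON mapping, in which a field's JSON name is its proto name with each underscore removed and the following character uppercased. A std-only sketch of that rule (the function name is ours, not pbjson's):

```rust
/// Converts a proto field name to its proto3 JSON name,
/// e.g. "created_by_account_id" -> "createdByAccountId".
fn to_json_name(field: &str) -> String {
    let mut out = String::with_capacity(field.len());
    let mut upper_next = false;
    for c in field.chars() {
        if c == '_' {
            // Drop the underscore and capitalize whatever follows it.
            upper_next = true;
        } else if upper_next {
            out.extend(c.to_uppercase());
            upper_next = false;
        } else {
            out.push(c);
        }
    }
    out
}
```

Digits have no uppercase form, so `address_1` simply becomes `address1`.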

As always, perform make and then stage and commit the generated files before you start making changes:

$ make

cargo fmt --all

cargo build

Compiling protocol v0.1.0 (/Users/davidr/workspaces/foo/crates/protocol)

Compiling acme-user-server v0.1.0 (/Users/davidr/workspaces/foo/crates/user_server)

Finished `dev` profile [unoptimized + debuginfo] target(s) in 1.46s


$ git add -A

$ git commit -m "saas-rs generate service user"

Publishing a new v2 of your protocol

To publish a new v2 version of your protocol, the generate service command is used like this:

$ saas-rs generate service user --version 2

It is up to you to decide how to implement the new protocol. One common strategy is to create v2 controllers that initially delegate to the old v1 controllers, and are then customized as needed.

You would typically leave the v1 controllers in your code for at least a few years while you give your customers (including your web developers) ample time to migrate off of the v1 protocol.
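The delegation strategy might look like the following. Everything here is hypothetical: plain synchronous functions and made-up v1/v2 `Invoice` types stand in for the generated async Tonic controllers, and the v2 type adds one field only to show where customization would go.

```rust
mod v1 {
    /// Hypothetical v1 view model.
    #[derive(Debug, PartialEq)]
    pub struct Invoice {
        pub id: String,
        pub city: String,
    }

    /// Existing v1 controller logic (stubbed with fixed data).
    pub fn find_invoice(id: &str) -> Invoice {
        Invoice { id: id.to_string(), city: "Springfield".to_string() }
    }
}

mod v2 {
    use super::v1;

    /// Hypothetical v2 view model with one new field.
    #[derive(Debug, PartialEq)]
    pub struct Invoice {
        pub id: String,
        pub city: String,
        pub notes: Option<String>,
    }

    /// Converts a v1 record into the v2 shape; new fields start out empty.
    impl From<v1::Invoice> for Invoice {
        fn from(old: v1::Invoice) -> Self {
            Invoice { id: old.id, city: old.city, notes: None }
        }
    }

    /// Initially just delegates to v1 and converts the result; customize later.
    pub fn find_invoice(id: &str) -> Invoice {
        v1::find_invoice(id).into()
    }
}
```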

Running your new server

To run the server that implements your new "acme user v1" network service, a Config Store adapter is required. Configuration of a Config Store is explained elsewhere, so for now you can launch your server with the built-in in-memory ConfigStore adapter:

$ CONFIG_STORE_URL=memory://localhost cargo run --bin acme-user-server

Compiling protocol v0.1.0 (/Users/davidr/workspaces/foo/crates/protocol)

Compiling acme-user-server v0.1.0 (/Users/davidr/workspaces/foo/crates/user_server)

Finished `dev` profile [unoptimized + debuginfo] target(s) in 1.39s

Running `target/debug/acme-user-server`

[2025-07-17T18:01:00Z INFO acme_user_server] Attached to config store config_store_url=memory://localhost

[2025-07-17T18:01:00Z INFO acme_user_server] Attached to session store session_store_url=redis://localhost:6379/8

[2025-07-17T18:01:00Z INFO acme_user_server] Attached to object store object_store_url=mongodb://localhost:27017/acme

[2025-07-17T18:01:00Z INFO acme_user_server] Created admin account

[2025-07-17T18:01:00Z INFO acme_user_server] Listening on 0.0.0.0:3000 for http

To run on a non-default port, set the PORT environment variable, following Foreman / Heroku / Dokku / Cloud Foundry conventions:

$ PORT=1234 CONFIG_STORE_URL=memory://localhost cargo run --bin acme-user-server

...

[2025-07-17T18:04:18Z INFO acme_user_server] Listening on 0.0.0.0:1234 for http
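The port selection shown above, a PORT environment variable with a fallback to 3000, can be sketched like this. The function is hypothetical rather than the generated main.rs. It takes the variable's value as a parameter so it is easy to test, and it rejects values that are not valid port numbers.

```rust
use std::net::{Ipv4Addr, SocketAddr};

/// Default port seen in the server's startup log.
const DEFAULT_PORT: u16 = 3000;

/// Hypothetical: computes the listen address from an optional PORT value,
/// binding all IPv4 interfaces (0.0.0.0) as in the log output above.
fn listen_addr(port_var: Option<&str>) -> Result<SocketAddr, std::num::ParseIntError> {
    let port = match port_var {
        Some(value) => value.trim().parse::<u16>()?,
        None => DEFAULT_PORT,
    };
    Ok(SocketAddr::from((Ipv4Addr::UNSPECIFIED, port)))
}
```

A caller would typically pass `std::env::var("PORT").ok().as_deref()`.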

Integration and Acceptance Testing

To perform integration testing and acceptance testing, ConfigStore and SessionStore adapters must be configured. Otherwise those tests are skipped, and only unit tests run.

Integration and Acceptance tests can be invoked using in-memory storage adapters with:

$ export TEST_CONFIG_STORE_URL=memory://localhost

$ export TEST_SESSION_STORE_URL=memory://localhost

$ make check

Running tests/integration_config_store_accounts.rs (target/debug/deps/integration_config_store_accounts-97dc931ee3e81b10)

running 6 tests

test cannot_delete_non_existent_account ... ok

test cannot_find_non_existent_account ... ok

test cannot_update_non_existent_account ... ok

test can_create_account_with_caller_assigned_id ... ok

test can_create_account_and_auto_assign_id ... ok

test can_perform_crud_operations ... ok

Running tests/acceptance_login_with_github.rs (target/debug/deps/acceptance_login_with_github-ea31e740e423d74c)

running 1 test

test can_login_for_the_first_time ... ok

...
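A test that skips itself when no store is configured usually follows the pattern below. This is a hypothetical sketch, not the generated testsupport.rs: it reads TEST_CONFIG_STORE_URL through an injected lookup and returns early instead of failing.

```rust
/// Hypothetical: returns the store URL a test should use, or None if the test should skip.
fn test_store_url(var: &str, get_env: impl Fn(&str) -> Option<String>) -> Option<String> {
    // An empty value is treated the same as an unset variable.
    get_env(var).filter(|url| !url.is_empty())
}

/// Hypothetical test body. In a real #[test] you would pass `|k| std::env::var(k).ok()`
/// and simply `return;` in the else branch; here it returns whether the test ran.
fn run_crud_test(get_env: impl Fn(&str) -> Option<String>) -> bool {
    let Some(url) = test_store_url("TEST_CONFIG_STORE_URL", get_env) else {
        eprintln!("skipping: TEST_CONFIG_STORE_URL is not set");
        return false;
    };
    // Connect to the store at `url` and perform CRUD assertions here.
    println!("running against {url}");
    true
}
```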

Debian APT Packaging

A crates/user_server/debian/service file is also generated. You can fill it out in greater detail if you want to package your new service with cargo-deb and publish it to a Debian APT repository such as Deb Stash. You can do so on Linux with:

$ cargo install cargo-deb

$ cargo deb -p acme-user-server

The generate model command

The generate model command generates a Protobuf message that acts as a "view model" (the representation of a record transferred over the wire to your gRPC network service), along with a bucket definition for storage.

Model records must be identified by a string-based surrogate key, such as an XID or UUID, to be compatible with modern scale-out storage systems.

The generate model command is used like this:

$ saas-rs generate model invoice --service user --version 1 customer_id address_1 address_2 city state zip postal_code country_iso2

$ make

$ git add -A

$ git commit -m "saas-rs generate model invoice --service user --version 1 customer_id address_1 address_2 city state zip postal_code country_iso2"

The following content is added or changed in your Rust workspace:

crates/

├── config_store/

│ ├── src/

│ │ └── bucket.rs

├── protocol/

│ ├── src/

│ │ └── generated/

│ │ ├── acme_user_v1.rs

│ │ └── acme_user_v1_serde.rs

proto/

└── acme/

└── user/

└── v1/

├── invoice.proto

└── user_service.proto

An examination of the proto/acme/user/v1/invoice.proto file shows the generated view model:

syntax = "proto3";

package acme.user.v1;

import "google/protobuf/timestamp.proto";

message Invoice {
  string id = 1;
  string customer_id = 2;
  string address_1 = 3;
  string address_2 = 4;
  string city = 5;
  string state = 6;
  string zip = 7;
  string postal_code = 8;
  string country_iso2 = 9;

  google.protobuf.Timestamp created_at = 1000;
  optional string created_by_account_id = 1001;
  optional google.protobuf.Timestamp deleted_at = 1002;
  optional string deleted_by_account_id = 1003;
  optional google.protobuf.Timestamp updated_at = 1004;
  optional string updated_by_account_id = 1005;

  oneof owner {
    string owner_account_id = 1006;
  }
}
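Prost turns this message into a plain Rust struct. The hand-written approximation below is abridged to a few fields and uses a local `Timestamp` as a stand-in for `prost_types::Timestamp`; the real generated code also carries Prost derives and field attributes, omitted here. It shows the usual mapping: proto3 scalars become plain fields, `optional` fields and message-typed fields become `Option<T>`, and the `owner` oneof becomes an optional enum inside a nested `invoice` module, which is what the generated controller refers to as `invoice::Owner::OwnerAccountId`.

```rust
/// Local stand-in for prost_types::Timestamp.
#[derive(Debug, Clone, Default, PartialEq)]
pub struct Timestamp {
    pub seconds: i64,
    pub nanos: i32,
}

/// Approximate shape of the generated Invoice struct (abridged).
#[derive(Debug, Clone, Default, PartialEq)]
pub struct Invoice {
    pub id: String,                            // string id = 1;
    pub customer_id: String,                   // string customer_id = 2;
    pub city: String,                          // string city = 5;
    pub created_at: Option<Timestamp>,         // message-typed fields are Option in Rust
    pub created_by_account_id: Option<String>, // optional string created_by_account_id = 1001;
    pub owner: Option<invoice::Owner>,         // oneof owner { ... }
}

/// Prost places oneof enums in a module named after the message.
pub mod invoice {
    #[derive(Debug, Clone, PartialEq)]
    pub enum Owner {
        OwnerAccountId(String),
    }
}
```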

For this new Protobuf file to be found by the Prost code generator, it must be referenced by the proto/acme/user/v1/user_service.proto file:

import "acme/user/v1/invoice.proto";

Also notice that a Bucket enum variant was added in crates/config_store/src/bucket.rs, which tells the storage layer that this bucket represents:

- a new table, if you'll be using an RDBMS based ConfigStore

- a new collection, if you'll be using a Document based ConfigStore

- or a new key prefix, if you'll be using a KV based ConfigStore

pub enum Bucket {
    #[strum(serialize = "invoices")]
    Invoices,
    ...
}

Storage models

View models are translated into storage models before being used with a storage adapter. Storage models are general-purpose BSON documents rather than concrete structs, so you don't have to define both view models and storage models redundantly. View models are converted to and from storage models with help from the pbbson crate. Storage models are described in more detail in another section.

Compound model names

It's perfectly valid to define a new model with a compound name, such as LinkedAccount or InvoiceChangeAction.

When the generate model command is used like this:

$ saas-rs generate model invoice-change-action --service user --version 1

The model filename is automatically snake-cased to:

proto/acme/user/v1/invoice_change_action.proto
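The naming rules in this section (the snake_cased file name above, plus the camelCased and PascalCased plural bucket names described next) can be approximated as follows. This is a hypothetical sketch rather than the generator's code; in particular it pluralizes naively by appending `s`, which the real generator may handle more carefully.

```rust
/// Splits a CLI model name like "invoice-change-action" into its words.
fn words(name: &str) -> Vec<&str> {
    name.split(|c: char| c == '-' || c == '_').filter(|w| !w.is_empty()).collect()
}

/// Uppercases the first character of a word.
fn capitalize(word: &str) -> String {
    let mut chars = word.chars();
    match chars.next() {
        Some(first) => first.to_uppercase().chain(chars).collect(),
        None => String::new(),
    }
}

/// "invoice-change-action" -> "invoice_change_action.proto"
fn proto_file_name(name: &str) -> String {
    format!("{}.proto", words(name).join("_"))
}

/// "invoice-change-action" -> "InvoiceChangeActions" (naive plural: append "s")
fn bucket_variant(name: &str) -> String {
    let pascal: String = words(name).iter().map(|w| capitalize(w)).collect();
    format!("{pascal}s")
}

/// "invoice-change-action" -> "invoiceChangeActions"
fn bucket_name(name: &str) -> String {
    let variant = bucket_variant(name);
    let mut chars = variant.chars();
    match chars.next() {
        Some(first) => first.to_lowercase().chain(chars).collect(),
        None => String::new(),
    }
}
```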

And the bucket enum variant's strum serialize attribute defines a camel-cased + pluralized bucket name for KV and Document stores, while the enum variant identifier itself is pascal-cased + pluralized:

pub enum Bucket {
    #[strum(serialize = "invoiceChangeActions")]
    InvoiceChangeActions,
    ...
}

Making Further Changes

The fields initially generated are completely customizable. Only the id and audit fields are expected to remain unaltered. So there is no difference between invoking the generate model command with a complete field list and invoking it with an empty list and typing in fields by hand.

Some common changes to models include:

- setting fields as optional

- tweaking the datatypes of the generated fields

- adding additional audit fields, such as last_viewed_by and last_viewed_at, or approved_by and approved_at

The generate resource command

The generate resource command generates a model plus its operations, including the default CRUD operations and a validate operation.

The command is used with arguments identical to the generate model command, like this:

$ saas-rs generate resource invoice --service user --version 1 customer_id address_1 address_2 city state zip postal_code country_iso2

$ make

$ git add -A

$ git commit -m "saas-rs generate resource invoice --service user --version 1 customer_id address_1 address_2 city state zip postal_code country_iso2"

The following content is added or changed in your Rust workspace:

crates/

├── config_store/

│ ├── src/

│ │ └── bucket.rs

├── protocol/

│ ├── src/

│ │ └── generated/

│ │ ├── acme_user_v1.rs

│ │ └── acme_user_v1_serde.rs

├── user_server/

│ ├── src/

│ │ └── v1/

│ │ └── mod.rs

│ └── tests/

│ └── integration_config_store_invoices.rs

proto/

└── acme/

└── user/

└── v1/

├── invoice.proto

├── invoice_resource.proto

└── user_service.proto

An examination of the proto/acme/user/v1/invoice_resource.proto file shows the generated default CRUD operations:

syntax = "proto3";

package acme.user.v1;

import "google/protobuf/field_mask.proto";
import "acme/user/v1/error.proto";
import "acme/user/v1/invoice.proto";

message InvoiceFilter {
  optional string id = 1;
}

message CreateInvoiceRequest {
  Invoice invoice = 1;
}

message CreateInvoiceResponse {
  Invoice invoice = 1;
}

message DeleteInvoiceRequest {
  string id = 1;
}

message DeleteInvoiceResponse {
}

message FindInvoiceRequest {
  string id = 1;
}

message FindInvoiceResponse {
  Invoice invoice = 1;
}

message FindManyInvoicesRequest {
  InvoiceFilter filter = 1;
  google.protobuf.FieldMask field_mask = 2;
  optional uint32 offset = 3;
  optional uint32 limit = 4;
}

message FindManyInvoicesResponse {
  repeated Invoice invoices = 1;
}

message UpdateInvoiceRequest {
  Invoice invoice = 1;
}

message UpdateInvoiceResponse {
  Invoice invoice = 1;
}

message ValidateInvoiceRequest {
  Invoice invoice = 1;
  bool existing = 2;
}

message ValidateInvoiceResponse {
  repeated ErrorObject errors = 1;
}
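FindManyInvoicesRequest uses offset/limit pagination. The generated find_many controller is not shown in full here, so the following is only a sketch of those semantics over an in-memory slice, assuming an absent offset means 0 and an absent limit means "no limit". The `paginate` function is hypothetical.

```rust
/// Hypothetical offset/limit pagination matching the optional uint32 fields above.
/// A missing offset starts at the beginning; a missing limit returns everything after it.
fn paginate<T: Clone>(items: &[T], offset: Option<u32>, limit: Option<u32>) -> Vec<T> {
    let start = offset.unwrap_or(0) as usize;
    // skip() and take() both stop gracefully at the end of the slice.
    let page = items.iter().skip(start);
    match limit {
        Some(n) => page.take(n as usize).cloned().collect(),
        None => page.cloned().collect(),
    }
}
```

A storage-backed implementation would push the offset and limit down into the ConfigStore query rather than loading every record first.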

For this new Protobuf file to be found by the Prost code generator, it must be referenced by the proto/acme/user/v1/user_service.proto file:

import "acme/user/v1/invoice_resource.proto";

An examination of the main gRPC service implementation file at crates/user_server/src/v1/mod.rs shows the rpc stubs that were generated with Not Implemented Yet placeholders to ensure your workspace compiles:

impl User for UserGrpcServerV1 {

...

async fn create_invoice(

&self,

_req: Request<CreateInvoiceRequest>,

) -> Result<Response<CreateInvoiceResponse>, Status> {

todo!("NIY")

}

async fn delete_invoice(

&self,

_req: Request<DeleteInvoiceRequest>,

) -> Result<Response<DeleteInvoiceResponse>, Status> {

todo!("NIY")

}

async fn find_invoice(&self, _req: Request<FindInvoiceRequest>) -> Result<Response<FindInvoiceResponse>, Status> {

todo!("NIY")

}

async fn find_many_invoices(

&self,

_req: Request<FindManyInvoicesRequest>,

) -> Result<Response<FindManyInvoicesResponse>, Status> {

todo!("NIY")

}

async fn update_invoice(

&self,

_req: Request<UpdateInvoiceRequest>,

) -> Result<Response<UpdateInvoiceResponse>, Status> {

todo!("NIY")

}

async fn validate_invoice(

&self,

_req: Request<ValidateInvoiceRequest>,

) -> Result<Response<ValidateInvoiceResponse>, Status> {

todo!("NIY")

}

...

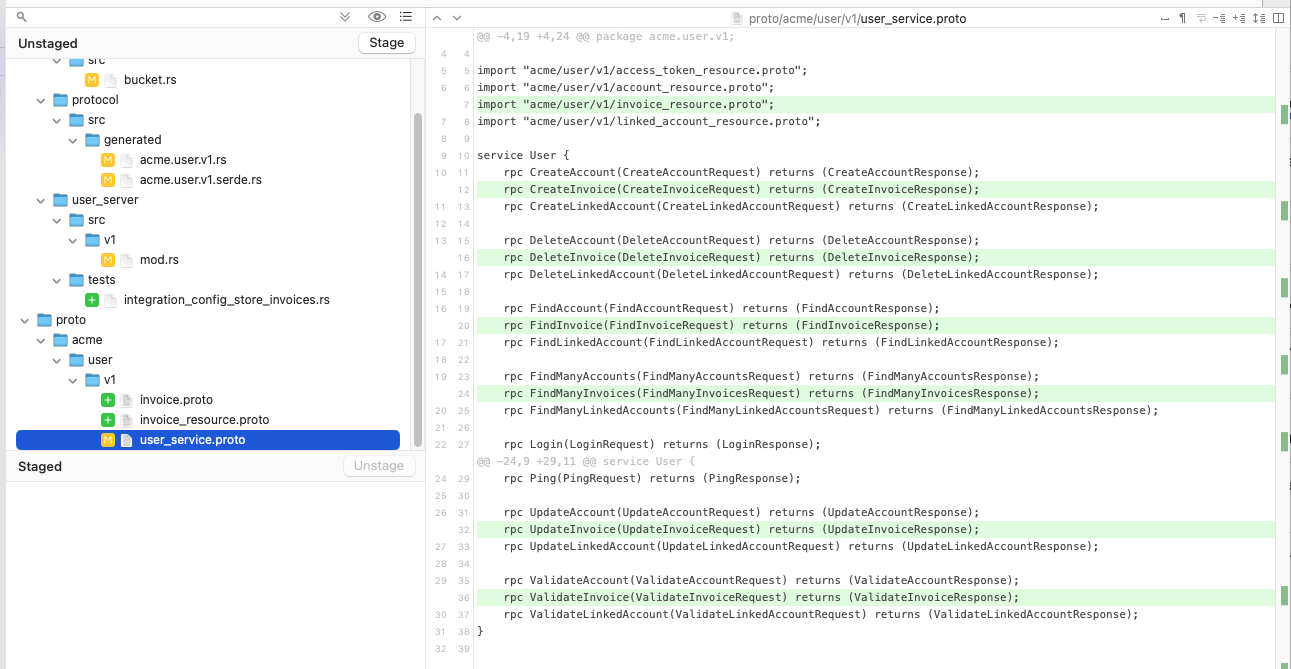

}A Fork based graphical diff view does the best job of showing the changes that were interleaved

into the proto/acme/user/v1/user_service.proto file:

Making Further Changes

The default CRUD code that was generated is just a starting point, and you are free to make changes to customize things to your liking. For example, you might:

- Add new operations that are above and beyond the usual CRUD operations

- Customize the pagination mechanism used by the find many operation

- Customize the fields that can be filtered on during find many operations

- Remove Delete and Update operations for resources that will be read-only, such as static lookup tables. The SaaS RS CLI does this for the saas-rs list generators command, which returns a static list of Generator records:

$ saas-rs list generators

┌──────────────────────┬─────────┬────────────────┬─────────────────────────────────┐

│ id ┆ type ┆ name ┆ description │

│ --- ┆ --- ┆ --- ┆ --- │

│ str ┆ str ┆ str ┆ str │

╞══════════════════════╪═════════╪════════════════╪═════════════════════════════════╡

│ d18nptv1t0sfku8vjl00 ┆ Feature ┆ api-keys ┆ Adds API Key management and au… │

│ d126jjn1t0s8usbjajpg ┆ Feature ┆ file-transfer ┆ Adds file upload+download capa… │

│ d1bc0dv1t0sda0dg0la0 ┆ Feature ┆ issue-tracking ┆ Adds issue tracking support to… │

│ d1bc2uf1t0sdd7jbftmg ┆ Feature ┆ service-broker ┆ Adds service broker support to… │

└──────────────────────┴─────────┴────────────────┴─────────────────────────────────┘

The generate controller command

The generate controller command is typically used after generating a resource, to replace the resource's Not Implemented Yet stubs with a default implementation.

When it is used like this:

$ saas-rs generate controller invoice --service user --version 1

$ make

$ git add -A

$ git commit -m "saas-rs generate controller invoice --service user --version 1"

The following content is added or changed in your Rust workspace:

crates/

└── user_server/

└── src/

└── v1/

├── controllers/

│ ├── invoice.rs

│ └── mod.rs

└── mod.rs

And the following things occurred:

- in crates/user_server/src/v1/mod.rs, the main service implementation functions for the invoice resource were rewritten, replacing any former function body with a single line that delegates to the corresponding function in the controller module

- in crates/user_server/src/v1/controllers/invoice.rs, default CRUD handling functions were generated
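The resulting structure, a thin service method that clones a shared `Arc<AppState>` and hands the request to a controller function, can be sketched without Tonic as follows. The types are hypothetical simplifications: the request is a plain string, the functions are synchronous, and `AppState` holds only a brand, whereas the generated code uses async functions with `Request`, `Response`, and `Status`.

```rust
use std::sync::Arc;

/// Hypothetical shared state; the generated AppState holds things like config_dao.
struct AppState {
    brand: String,
}

mod controllers {
    pub mod invoice {
        use super::super::AppState;
        use std::sync::Arc;

        /// Controller function: owns the business logic for "create invoice".
        pub fn create(app_state: Arc<AppState>, customer_id: String) -> Result<String, String> {
            if customer_id.is_empty() {
                return Err("customer_id is required".to_string());
            }
            Ok(format!("{}: invoice for {customer_id}", app_state.brand))
        }
    }
}

/// Service struct: each method only delegates to the controller module.
struct UserServerV1 {
    app_state: Arc<AppState>,
}

impl UserServerV1 {
    fn create_invoice(&self, customer_id: String) -> Result<String, String> {
        // Cloning an Arc only bumps a reference count; AppState itself is shared.
        controllers::invoice::create(self.app_state.clone(), customer_id)
    }
}
```

Keeping the service methods this thin means the controller functions can be unit-tested directly with a hand-built `AppState`.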

An examination of crates/user_server/src/v1/mod.rs shows the service functions that were rewritten to delegate to the new controller module:

impl User for UserGrpcServerV1 {
    ...

    async fn create_invoice(&self, req: Request<CreateInvoiceRequest>) -> Result<Response<CreateInvoiceResponse>, Status> {
        controllers::invoice::create(self.app_state.clone(), req).await
    }

    async fn delete_invoice(&self, req: Request<DeleteInvoiceRequest>) -> Result<Response<DeleteInvoiceResponse>, Status> {
        controllers::invoice::delete(self.app_state.clone(), req).await
    }

    ...
}

An examination of the crates/user_server/src/v1/controllers/invoice.rs module shows the default implementation for CRUD handling:

use crate::v1::{validation_errors, AppState};
use bson::{doc, DateTime};
use common::metadata::require_authorization;
use config_store::Bucket;
use log::debug;
use protocol::acme::user::v1::{
    invoice, CreateInvoiceRequest, CreateInvoiceResponse, DeleteInvoiceRequest, DeleteInvoiceResponse,
    FindInvoiceRequest, FindInvoiceResponse, FindManyInvoicesRequest, FindManyInvoicesResponse, Invoice,
    UpdateInvoiceRequest, UpdateInvoiceResponse, ValidateInvoiceRequest, ValidateInvoiceResponse,
};
use saas_rs_sdk::pbbson::Model;
use saas_rs_sdk::storage::config_store::FindOptions;
use std::sync::Arc;
use tonic::{Request, Response, Status};

pub async fn create(
    app_state: Arc<AppState>,
    req: Request<CreateInvoiceRequest>,
) -> Result<Response<CreateInvoiceResponse>, Status> {
    let current_account_id = require_authorization(&req)?;
    let mut invoice = req.into_inner().invoice.unwrap();
    invoice.created_by_account_id = Some(current_account_id.clone());
    invoice.owner = Some(invoice::Owner::OwnerAccountId(current_account_id));

    // Store
    invoice = app_state.config_dao.create(Bucket::Invoices, &invoice).await?;

    // Return result
    Ok(Response::new(CreateInvoiceResponse { invoice: Some(invoice) }))
}

pub async fn delete(
    app_state: Arc<AppState>,
    req: Request<DeleteInvoiceRequest>,
) -> Result<Response<DeleteInvoiceResponse>, Status> {
    // Authorize
    let current_account_id = require_authorization(&req)?;

    // Find
    let req = req.into_inner();
    let _existing: Invoice = app_state.config_dao.find(Bucket::Invoices, &req.id).await?;

    // Check access
    // check_can_write(self, &existing, &current_account_id).await?;

    // Delete
    app_state.config_dao.delete(Bucket::Invoices, &req.id, Some(current_account_id)).await?;

    // Return result
    Ok(Response::new(DeleteInvoiceResponse {}))
}

pub async fn find_one(
    app_state: Arc<AppState>,
    req: Request<FindInvoiceRequest>,
) -> Result<Response<FindInvoiceResponse>, Status> {
    let id = req.into_inner().id.clone();
    let invoice: Invoice = app_state.config_dao.find(Bucket::Invoices, &id).await?;
    Ok(Response::new(FindInvoiceResponse { invoice: Some(invoice) }))
}

pub async fn find_many(
    app_state: Arc<AppState>,
    req: Request<FindManyInvoicesRequest>,
) -> Result<Response<FindManyInvoicesResponse>, Status> {
    let req = req.into_inner();
    let filter = Model::from({
        let req_filter = req.filter;
        let mut filter = doc! {"deletedAt": None::<DateTime>};
        if let Some(req_filter) = req_filter {
            if let Some(ref id) = req_filter.id {
                filter.insert("id", id.clone());
            }
            //if let Some(ref owner_account_id) = req_filter.owner_account_id {
            //    filter.insert("ownerAccountId", owner_account_id.clone());
            //}
        }
        filter
    });
    let invoices: Vec<Invoice> = app_state.config_dao.find_many(Bucket::Invoices, FindOptions::new().with_filter(filter)).await?;
    Ok(Response::new(FindManyInvoicesResponse { invoices }))
}

pub async fn update(
    app_state: Arc<AppState>,
    req: Request<UpdateInvoiceRequest>,
) -> Result<Response<UpdateInvoiceResponse>, Status> {
    // Authorize
    let current_account_id = require_authorization(&req)?;

    // Find existing (mutable, because it is modified in place below)
    let req_metadata = req.metadata().clone();
    let req_invoice = req.into_inner().invoice.unwrap();
    let mut existing: Invoice = app_state.config_dao.find(Bucket::Invoices, &req_invoice.id).await?;

    // Validate
    let validate_req = common::metadata::forward(
        ValidateInvoiceRequest {
            invoice: Some(req_invoice.clone()),
            existing: true,
        },
        req_metadata,
    )?;
    let validate_res = validate(app_state.clone(), validate_req).await?.into_inner();
    if !validate_res.errors.is_empty() {
        let status = validation_errors::to_grpc(validate_res.errors);
        debug!(
            validation_errs = status.message();
            "Failure validating invoice"
        );
        return Err(status);
    }

    // Check access
    // check_can_write(self, &existing, &current_account_id).await?;

    // Store the updated existing record
    saas_rs_sdk::storage::models::assign_message(&mut existing, &req_invoice)?;
    existing.updated_by_account_id = Some(current_account_id);
    let invoice: Invoice = app_state.config_dao.update(Bucket::Invoices, &existing).await?;

    // Return result
    Ok(Response::new(UpdateInvoiceResponse { invoice: Some(invoice) }))
}

pub async fn validate(
    _app_state: Arc<AppState>,
    req: Request<ValidateInvoiceRequest>,
) -> Result<Response<ValidateInvoiceResponse>, Status> {
    // Authorize
    let _current_account_id = require_authorization(&req)?;
    let req = req.into_inner();
    let _invoice = req.invoice.unwrap();
    let /*mut*/ errors = vec![];

    // TODO
    // if invoice.__some_field__.is_empty() {
    //     errors.push(ErrorObject {
    //         status: format!("{:03}", http::StatusCode::BAD_REQUEST.as_u16()),
    //         title: "Validation Error".to_string(),
    //         detail: "A __some_field__ is required".to_string(),
    //         ..Default::default()
    //     });
    // }

    Ok(Response::new(ValidateInvoiceResponse { errors }))
}

Notice that placeholders were created to show you how to add validations for required fields and other business rules specific to your vertical.
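As a sketch of how that placeholder might be filled in, the self-contained example below collects validation errors for one required field and one business rule. `ErrorObject` and `Invoice` are hypothetical stand-ins (the real types come from the generated protocol crate, and the `customer_name` and `amount_cents` fields are invented for illustration); the status string is built with the same `format!("{:03}", ...)` pattern the placeholder uses.

```rust
/// Hypothetical stand-in for the generated `ErrorObject`; only the fields
/// used in the placeholder are modeled here.
#[derive(Debug, Default, Clone, PartialEq)]
pub struct ErrorObject {
    pub status: String,
    pub title: String,
    pub detail: String,
}

/// Hypothetical invoice with invented fields, for illustration only.
#[derive(Debug, Default, Clone)]
pub struct Invoice {
    pub customer_name: String,
    pub amount_cents: i64,
}

/// HTTP 400, the value `http::StatusCode::BAD_REQUEST.as_u16()` returns.
const BAD_REQUEST: u16 = 400;

/// Builds an error in the same shape as the generated placeholder.
fn validation_error(detail: &str) -> ErrorObject {
    ErrorObject {
        status: format!("{:03}", BAD_REQUEST),
        title: "Validation Error".to_string(),
        detail: detail.to_string(),
    }
}

/// Collects every violation rather than stopping at the first one,
/// so a client can fix all problems in a single round trip.
pub fn validate_invoice(invoice: &Invoice) -> Vec<ErrorObject> {
    let mut errors = vec![];
    // Required-field check, following the placeholder's `is_empty()` pattern.
    if invoice.customer_name.trim().is_empty() {
        errors.push(validation_error("A customer_name is required"));
    }
    // A hypothetical business rule specific to your vertical.
    if invoice.amount_cents <= 0 {
        errors.push(validation_error("amount_cents must be positive"));
    }
    errors
}
```

An empty `errors` vector means the record is valid, which is exactly the condition the generated `update` function checks before storing.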

Generating a Feature

Feature generators exist for some common capabilities which may be useful to your SaaS.

You can list the feature generators available to you, based on your current subscription, like this:

$ saas-rs list generators

┌──────────────────────┬─────────┬────────────────┬─────────────────────────────────┐

│ id ┆ type ┆ name ┆ description │

│ --- ┆ --- ┆ --- ┆ --- │

│ str ┆ str ┆ str ┆ str │

╞══════════════════════╪═════════╪════════════════╪═════════════════════════════════╡

│ d18nptv1t0sfku8vjl00 ┆ Feature ┆ api-keys ┆ Adds API Key management and au… │

│ d126jjn1t0s8usbjajpg ┆ Feature ┆ file-transfer ┆ Adds file upload+download capa… │

│ d1bc0dv1t0sda0dg0la0 ┆ Feature ┆ issue-tracking ┆ Adds issue tracking support to… │

│ d1bc2uf1t0sdd7jbftmg ┆ Feature ┆ service-broker ┆ Adds service broker support to… │

└──────────────────────┴─────────┴────────────────┴─────────────────────────────────┘

- api-keys: generates API Key support for an existing service

- file-transfer: generates file transfer support for an existing service

- service-broker: generates service broker support for an existing service

Generate the API Keys Feature

The API Keys feature provides two things:

- It provides users of your service with the ability to manage their own API Key records in the Session Store

- It extends the authorization middleware to look for Authorization: Bearer <an-api-key> headers and to look up those keys in the Session Store
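The header handling described above can be sketched as follows. This is not the generated middleware: `ApiKeyRecord`, `bearer_token`, and `authorize` are hypothetical names, and a `HashMap` stands in for the Session Store bucket. The scheme name is compared case-insensitively because HTTP authentication scheme names are case-insensitive.

```rust
use std::collections::HashMap;

/// Hypothetical API Key record; the generated model lives in api_key.proto.
#[derive(Debug, Clone, PartialEq)]
pub struct ApiKeyRecord {
    pub account_id: String,
    pub revoked: bool,
}

/// Extracts the token from an `Authorization: Bearer <token>` header value.
pub fn bearer_token(header_value: &str) -> Option<&str> {
    let (scheme, token) = header_value.trim().split_once(' ')?;
    if !scheme.eq_ignore_ascii_case("bearer") {
        return None;
    }
    let token = token.trim();
    if token.is_empty() {
        None
    } else {
        Some(token)
    }
}

/// Resolves the account behind an API key. The HashMap is a stand-in for
/// the Session Store bucket that the feature adds.
pub fn authorize(
    header_value: Option<&str>,
    session_store: &HashMap<String, ApiKeyRecord>,
) -> Result<String, &'static str> {
    let token = header_value
        .and_then(bearer_token)
        .ok_or("missing or malformed Authorization header")?;
    match session_store.get(token) {
        Some(record) if !record.revoked => Ok(record.account_id.clone()),
        Some(_) => Err("API key revoked"),
        None => Err("unknown API key"),
    }
}
```

The distinct error strings are only useful for logging; a real middleware would typically map all of them to a single `Unauthenticated` gRPC status so callers cannot probe which keys exist.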

Generate the API Keys feature like this:

$ saas-rs generate feature --name api-keys --service user --version 1

The following content is added or changed in your Rust workspace:

crates/
├── session_store/
│   └── src/
│       └── bucket.rs
└── user_server/
    └── src/
        └── v1/
            ├── controllers/
            │   ├── api_key.rs
            │   └── mod.rs
            ├── middleware/
            │   └── authorization.rs
            └── mod.rs

proto/
└── acme/
    └── user/
        └── v1/
            ├── api_key.proto
            ├── api_key_resource.proto
            └── user_service.proto

And the following things occurred:

- a Session Store bucket enum variant was added for storing API Key records

- an API Key controller was generated with a full Session Store backed implementation

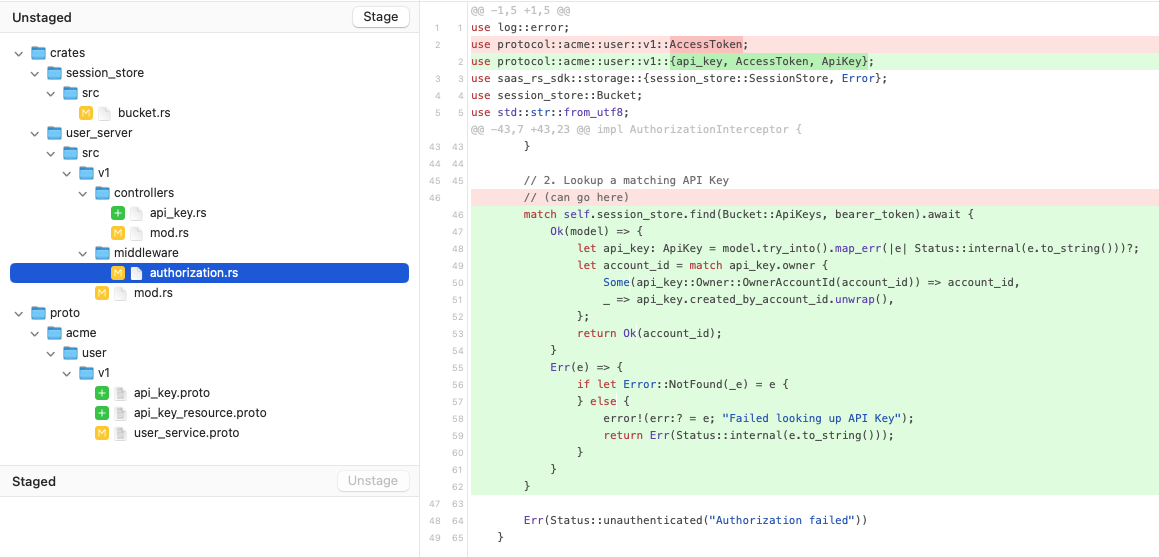

- the authorizaton middleware was rewritten and augmented with API Key record lookups in the Session Store when an

Authorization: Bearer ...header is present in inbound gRPC http requests - Protobuf files were generated for the API Key model and its associated resource, which supports your end users self-service managing their API Keys

A Fork based graphical diff view does the best job of showing the changes that were made in the authorization middleware module:

Generate the File Transfer Feature

The file transfer feature adds file upload and download support to your service endpoint, backed by the Object Store.

Generate the file transfer feature like this:

$ saas-rs generate feature --name file-transfer --service user --version 1

The following content is added or changed in your Rust workspace:

crates/
└── user_server/
    ├── src/
    │   └── v1/
    │       ├── controllers/
    │       │   ├── file.rs
    │       │   └── mod.rs
    │       └── mod.rs
    └── Cargo.toml

proto/
└── acme/
    └── user/
        └── v1/
            ├── file.proto
            ├── file_resource.proto
            └── user_service.proto

The File Controller provides full support for receiving file uploads, storing them in the Object Store, serving later downloads of those files, and managing metadata such as filename and length.
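To illustrate the shape of that flow, here is a minimal in-memory sketch. It assumes uploads arrive as a stream of chunks with the filename carried by one of the chunks, a common gRPC pattern but not necessarily the message layout in the generated file.proto. `UploadChunk`, `FileMetadata`, and `InMemoryFiles` are hypothetical, and a `HashMap` replaces the Object Store.

```rust
use std::collections::HashMap;

/// Hypothetical upload message; an early chunk carries the filename.
pub struct UploadChunk {
    pub filename: Option<String>,
    pub data: Vec<u8>,
}

/// Metadata kept alongside each stored object.
#[derive(Debug, Clone, PartialEq)]
pub struct FileMetadata {
    pub id: String,
    pub filename: String,
    pub length: u64,
}

/// In-memory stand-in for the Object Store plus a metadata index.
#[derive(Default)]
pub struct InMemoryFiles {
    objects: HashMap<String, Vec<u8>>,
    metadata: HashMap<String, FileMetadata>,
}

impl InMemoryFiles {
    /// Concatenates streamed chunks, stores the bytes, and records metadata.
    /// The filename comes from the first chunk that carries one.
    pub fn upload<I>(&mut self, id: &str, chunks: I) -> Result<FileMetadata, String>
    where
        I: IntoIterator<Item = UploadChunk>,
    {
        let mut filename = None;
        let mut bytes = Vec::new();
        for chunk in chunks {
            if filename.is_none() {
                filename = chunk.filename;
            }
            bytes.extend_from_slice(&chunk.data);
        }
        let filename = filename.ok_or("no chunk carried a filename")?;
        // Length is derived from the bytes received, not trusted from the client.
        let meta = FileMetadata { id: id.to_string(), filename, length: bytes.len() as u64 };
        self.objects.insert(id.to_string(), bytes);
        self.metadata.insert(id.to_string(), meta.clone());
        Ok(meta)
    }

    /// Returns the stored metadata and bytes for a later download.
    pub fn download(&self, id: &str) -> Option<(&FileMetadata, &[u8])> {
        let meta = self.metadata.get(id)?;
        let bytes = self.objects.get(id)?;
        Some((meta, bytes.as_slice()))
    }
}
```

Keeping metadata in a separate index means listing files never has to read object bytes, which matters once files get large.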

Generate the Service Broker Feature

The service broker feature defines three new record types: Service, Plan, and ServiceInstance.

If you're familiar with Heroku, Cloud Foundry, or the Open Service Broker spec, then you already know how these work.

- Services and Plans are typically managed through an admin-facing service or a corresponding Admin CLI.

- Services and Plans can typically be displayed as read-only through an end user-facing service or corresponding User CLI.

- Plan records are typically synchronized with a payment provider. For example, if you use Stripe, you would create a Stripe Product record for every SaaS RS Plan record. SaaS RS provides this synchronization code.

- ServiceInstance records are typically maintained by a Stripe callback endpoint that you would build and use to detect certain Stripe events, such as:

  - when a purchase is completed, create a ServiceInstance record to track it

  - when a subscription is canceled, soft-delete the corresponding ServiceInstance record

- Update your user service endpoint to check for active ServiceInstance records before deciding to allow certain operations.
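The callback logic above can be sketched as follows. `checkout.session.completed` and `customer.subscription.deleted` are real Stripe event types, but everything else is assumed: `StripeEvent` holds fields you would first extract from the verified webhook payload, `ServiceInstance` is a hypothetical stand-in for the generated model, the `si-N` IDs stand in for real XIDs, and a `Vec` replaces the ConfigStore bucket. Soft-deletion sets `deleted_at` rather than removing the record, mirroring the `deletedAt` filter used by the generated `find_many`.

```rust
/// Hypothetical ServiceInstance record; the generated model is in service_instance.proto.
#[derive(Debug, Clone, PartialEq)]
pub struct ServiceInstance {
    pub id: String,
    pub account_id: String,
    pub plan_id: String,
    pub stripe_subscription_id: String,
    /// Soft-delete marker (Unix seconds); `None` means the instance is active.
    pub deleted_at: Option<u64>,
}

/// The subset of a Stripe webhook event this sketch needs, already extracted.
pub struct StripeEvent<'a> {
    pub event_type: &'a str,
    pub account_id: &'a str,
    pub plan_id: &'a str,
    pub subscription_id: &'a str,
    pub created: u64,
}

/// Applies one webhook event to the list of instances.
pub fn handle_event(instances: &mut Vec<ServiceInstance>, event: &StripeEvent<'_>) {
    match event.event_type {
        // A purchase completed: start tracking it.
        "checkout.session.completed" => {
            let id = format!("si-{}", instances.len() + 1);
            instances.push(ServiceInstance {
                id,
                account_id: event.account_id.to_string(),
                plan_id: event.plan_id.to_string(),
                stripe_subscription_id: event.subscription_id.to_string(),
                deleted_at: None,
            });
        }
        // The subscription ended: soft-delete instead of removing the record.
        "customer.subscription.deleted" => {
            for si in instances.iter_mut() {
                if si.stripe_subscription_id == event.subscription_id && si.deleted_at.is_none() {
                    si.deleted_at = Some(event.created);
                }
            }
        }
        // Other event types are ignored in this sketch.
        _ => {}
    }
}

/// The check a user-facing endpoint can make before allowing an operation.
pub fn has_active_instance(instances: &[ServiceInstance], account_id: &str, plan_id: &str) -> bool {
    instances
        .iter()
        .any(|si| si.account_id == account_id && si.plan_id == plan_id && si.deleted_at.is_none())
}
```

Because canceled instances are only marked, billing history stays queryable after a subscription ends.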

Generate the Service Broker feature like this:

$ saas-rs generate feature --name service-broker --service user --version 1

The following content is added or changed in your Rust workspace:

crates/
├── config_store/
│   └── src/
│       └── bucket.rs
└── user_server/
    └── src/
        └── v1/
            ├── controllers/
            │   ├── mod.rs
            │   ├── plan.rs
            │   ├── service.rs
            │   └── service_instance.rs
            └── mod.rs

metadata/
└── service_broker/
    ├── service_1.yaml
    └── service_2.yaml

proto/
└── acme/
    └── user/
        └── v1/
            ├── plan.proto
            ├── plan_resource.proto
            ├── service.proto
            ├── service_resource.proto
            ├── service_instance.proto
            ├── service_instance_resource.proto
            └── user_service.proto

Bootstrapping ConfigStore Services and Plans with YAML files

The metadata/service_broker/ folder contains two example service definitions and their corresponding plans, defined in YAML.

The first might be used if you want to charge a one-time membership fee when someone subscribes to it.

kind: Service
id: d1ui8n71t0s84oaqjc30
name: my-service-1
description: My Service 1
visible: true
planUpdateable: false
metadata:
  displayName: My Service 1
---
kind: Plan
id: d1ui8n71t0s84oaqjc3g
serviceId: d1ui8n71t0s84oaqjc30
name: default
description: Monthly subscription
metadata:
  displayName: Default
  costs:
    - id: d1ui8n71t0s84oaqjc40
      unit: initiation fee
      amount:
        usd: 100

The second might be used if you want to offer the service as a subscription, with plans at different price points:

kind: Service
id: d1ui8n71t0s84oaqjc4g
name: my-service-2
description: My Service 2
visible: true
planUpdateable: true
metadata:
  displayName: My Service 2
---
kind: Plan
id: d1ui8n71t0s84oaqjc50
serviceId: d1ui8n71t0s84oaqjc4g
name: basic
description: Basic
metadata:
  displayName: Basic
  bullets:
    - first feature
  costs:
    - id: d1ui8n71t0s84oaqjc60
      unit: per month
      recurringInterval: month
      amount:
        usd: 20
---
kind: Plan
id: d1ui8n71t0s84oaqjc5g
serviceId: d1ui8n71t0s84oaqjc4g
name: professional
description: Professional
metadata:
  displayName: Professional
  bullets:
    - first feature
    - second feature
  costs:
    - id: d1ui8n71t0s84oaqjc6g
      unit: per month
      recurringInterval: month
      amount:
        usd: 50

Notice that the records are given pre-allocated, static XIDs, which are committed to Git. These IDs would typically also appear as consts in your Rust code. Keep in mind that once at least one service instance exists for a given plan, you can no longer change that plan; your only path forward is to discontinue it and replace it with a new plan that has different parameters. It's up to you to decide how long to keep serving customers on an old plan. I've seen some managed service providers give customers 6 months to move off a plan that is being retired.
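The plan-immutability rule can be expressed as a guard plus a replace operation. This is a sketch under stated assumptions: `Plan`, `PlanStatus`, `PlanChangeError`, `update_plan_price`, and `replace_plan` are hypothetical names, and the caller is assumed to supply the number of service instances already referencing the plan.

```rust
/// Hypothetical plan lifecycle states for this sketch.
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum PlanStatus {
    Active,
    Discontinued,
}

#[derive(Debug, Clone)]
pub struct Plan {
    pub id: String,
    pub price_usd: u64,
    pub status: PlanStatus,
}

#[derive(Debug, PartialEq)]
pub enum PlanChangeError {
    /// The plan already has service instances, so it must be replaced, not edited.
    HasServiceInstances { count: usize },
}

/// Allows editing only while no service instance references the plan.
pub fn update_plan_price(plan: &mut Plan, new_price_usd: u64, instance_count: usize) -> Result<(), PlanChangeError> {
    if instance_count > 0 {
        return Err(PlanChangeError::HasServiceInstances { count: instance_count });
    }
    plan.price_usd = new_price_usd;
    Ok(())
}

/// The path forward once a plan is in use: retire it and introduce a successor
/// with a new, pre-allocated ID. Existing instances keep pointing at the old plan.
pub fn replace_plan(old: &mut Plan, new_id: &str, new_price_usd: u64) -> Plan {
    old.status = PlanStatus::Discontinued;
    Plan { id: new_id.to_string(), price_usd: new_price_usd, status: PlanStatus::Active }
}
```

Marking the old plan Discontinued rather than deleting it keeps existing instances valid during whatever migration window you choose.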

A bootstrap mechanism is required to upload these YAML files into the corresponding Service and Plan buckets in your ConfigStore. An Admin CLI is usually a good fit for this.
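One way a bootstrap step could route these YAML documents is sketched below. It is deliberately naive: it splits on `---` lines and scans only unindented `key: value` lines, so it is not a YAML parser (a real loader would use a YAML library and deserialize into the generated types). The `Bucket` variants `Services` and `Plans` are assumed names; the generated `config_store::Bucket` enum may differ.

```rust
/// Hypothetical bucket names; the generated `config_store::Bucket` may differ.
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum Bucket {
    Services,
    Plans,
}

/// One YAML document routed to the bucket it belongs in.
#[derive(Debug, PartialEq)]
pub struct BootstrapRecord {
    pub bucket: Bucket,
    pub id: String,
    pub body: String,
}

/// Reads a top-level `key: value` pair, ignoring indented and list-item lines.
fn top_level_value<'a>(doc: &'a str, key: &str) -> Option<&'a str> {
    doc.lines()
        .filter(|line| !line.starts_with(' ') && !line.starts_with('-'))
        .find_map(|line| line.strip_prefix(key)?.strip_prefix(':').map(str::trim))
}

/// Splits a multi-document YAML file on `---` separators and routes each
/// document by its `kind`. Empty documents (e.g. a leading `---`) are skipped.
pub fn route_documents(yaml: &str) -> Result<Vec<BootstrapRecord>, String> {
    let mut docs = Vec::new();
    let mut current = String::new();
    for line in yaml.lines() {
        if line.trim_end() == "---" {
            docs.push(std::mem::take(&mut current));
        } else {
            current.push_str(line);
            current.push('\n');
        }
    }
    docs.push(current);

    let mut records = Vec::new();
    for doc in docs.into_iter().filter(|d| !d.trim().is_empty()) {
        let bucket = match top_level_value(&doc, "kind") {
            Some("Service") => Bucket::Services,
            Some("Plan") => Bucket::Plans,
            other => return Err(format!("unsupported kind: {:?}", other)),
        };
        let id = top_level_value(&doc, "id").ok_or("document is missing an id")?.to_string();
        records.push(BootstrapRecord { bucket, id, body: doc });
    }
    Ok(records)
}
```

Because the IDs are static, re-running such a bootstrap can upsert by `id` instead of creating duplicates.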

Synchronizing Services and Plans with Stripe

Once Service and Plan records are defined in your ConfigStore, they need to be synchronized with Stripe. For every Plan record, you will want to create a Stripe Product record and corresponding Stripe Price records.

SaaS RS provides the pre-built code that performs this synchronization in the SaaS RS SDK crate. See the src/payments/adapter/stripe/ directory for more information.
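To make the Plan-to-Price mapping concrete, here is a hedged sketch of the conversion step only; it does not reproduce the SDK's code or call Stripe. It relies on two documented Stripe Price conventions: `unit_amount` is expressed in the smallest currency unit (cents for USD), and `recurring.interval` is one of `day`, `week`, `month`, or `year`. `PlanCost`, `PriceParams`, and `price_params` are hypothetical, and using the cost's XID as the Price `lookup_key` is an assumed design choice.

```rust
/// A plan cost as it appears in the YAML (`amount.usd`, optional `recurringInterval`).
pub struct PlanCost {
    pub id: String,
    pub usd: f64,
    pub recurring_interval: Option<String>,
}

/// Fields a Stripe Price needs, kept as plain data in this sketch.
#[derive(Debug, PartialEq)]
pub struct PriceParams {
    pub currency: &'static str,
    /// Smallest currency unit, i.e. cents for USD.
    pub unit_amount: i64,
    /// `None` means a one-time price.
    pub recurring_interval: Option<&'static str>,
    /// Lets a later sync run find the Price created for this cost.
    pub lookup_key: String,
}

pub fn price_params(cost: &PlanCost) -> Result<PriceParams, String> {
    if !cost.usd.is_finite() || cost.usd < 0.0 {
        return Err(format!("invalid amount: {}", cost.usd));
    }
    // Round rather than truncate, so 19.99 becomes 1999 cents, not 1998.
    let unit_amount = (cost.usd * 100.0).round() as i64;
    let recurring_interval = match cost.recurring_interval.as_deref() {
        None => None,
        Some("day") => Some("day"),
        Some("week") => Some("week"),
        Some("month") => Some("month"),
        Some("year") => Some("year"),
        Some(other) => return Err(format!("unsupported recurringInterval: {other}")),
    };
    Ok(PriceParams { currency: "usd", unit_amount, recurring_interval, lookup_key: cost.id.clone() })
}
```

A cost without `recurringInterval`, such as the initiation fee in the first YAML file, maps to a one-time price.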

Once your Stripe Product records exist, you can log into the Stripe console and test by generating a new invoice or subscription that contains one or more products. Your web front-end team may then decide to automate this by offering end users a self-service way to purchase. This is only suitable when you're willing to disclose your pricing, which is typically not the case for professional-services-oriented offerings.